
Sales +603 2770 2833

- sales@webserver.com.my

Why is having duplicate content an issue for SEO?


In the digital age, content is king, and a well-optimized website can make all the difference in attracting and retaining visitors. However, not all content is created equal. One common pitfall that can significantly hinder your site’s performance is duplicate content: blocks of text that appear on multiple pages, either on the same site or across different domains. While it might seem harmless, the repercussions for your search engine optimization (SEO) can be severe. To prevent them, site owners should identify duplicate content with auditing tools and apply strategies that fix it.

Search engines strive to deliver the best and most relevant results to users, and they frown upon content that appears in more than one place. Duplicate content can confuse search engines about which version to rank and, when it looks like an attempt to manipulate rankings, diminish your site’s credibility. In this post, we will explore why duplicate content poses a problem for SEO, how it impacts your site’s visibility, and what steps you can take to ensure your content remains unique and valuable to both search engines and users. Let’s dive into the intricacies of duplicate content and how you can navigate this challenging aspect of SEO.

Impact of Duplicate Content on SEO

How Search Engines Handle Duplicate Content

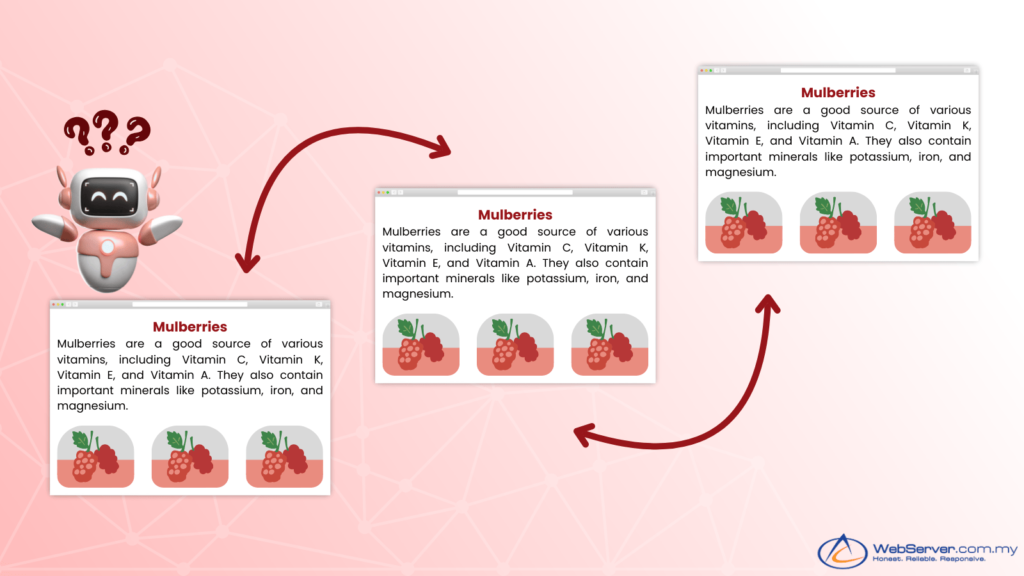

Search engines like Google aim to provide users with the most relevant and unique results for their queries. When they encounter duplicate content, they have to decide which version is the most relevant to display. This often results in Google’s algorithms consolidating the duplicate content and choosing one version to display while filtering out the others. This process can lead to significant issues if the version chosen is not the one you intended to rank or if your valuable content is overlooked.
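To make the consolidation step concrete, here is a toy Python sketch that groups pages whose text is identical and keeps one representative URL per group. It is not Google’s algorithm: the whitespace and case normalization, the SHA-256 fingerprint, and the “shortest URL wins” rule are illustrative assumptions, and the example.com pages are hypothetical.

```python
import hashlib
import re
from collections import defaultdict


def content_fingerprint(text: str) -> str:
    """Hash the page text after collapsing whitespace and lowercasing,
    so trivial formatting differences do not hide a duplicate."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def cluster_duplicates(pages: dict[str, str]) -> dict[str, list[str]]:
    """Group URLs whose text is identical after normalization.

    Returns a mapping from a representative URL to every URL in its group.
    The representative is the shortest URL (ties broken alphabetically),
    a hypothetical rule chosen only for illustration.
    """
    groups: dict[str, list[str]] = defaultdict(list)
    for url, text in pages.items():
        groups[content_fingerprint(text)].append(url)

    clusters: dict[str, list[str]] = {}
    for urls in groups.values():
        representative = min(urls, key=lambda u: (len(u), u))
        clusters[representative] = sorted(urls)
    return clusters


if __name__ == "__main__":
    pages = {
        "https://example.com/shoes": "Red running shoes, size 42.",
        "https://example.com/shoes?ref=home": "Red  running shoes, size 42.",
        "https://example.com/boots": "Leather hiking boots.",
    }
    for rep, urls in cluster_duplicates(pages).items():
        print(rep, "<-", urls)
```

Real search engines also weigh signals such as canonical tags, redirects, and internal links when choosing which version to show, which is why declaring your preferred URL matters.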

Negative Effects on Search Engine Rankings

Duplicate content can dilute the value of your content and your website’s authority. When the same or similar content appears on several pages, whether on your own site or on other sites, search engines may struggle to determine which page is the original and which to prioritize. This can lead to:

- Reduced Visibility: The chances of any one of the duplicate pages ranking high in search results decrease.

- Lower Page Authority: Instead of consolidating link equity (the value from inbound links) to one page, duplicate content spreads it thin across multiple pages, weakening the overall authority.

- Confusing Signals: Search engines may receive mixed signals about which page to index and rank, leading to inconsistencies and lower rankings.

Potential Penalties from Search Engines

While search engines do not typically impose penalties for duplicate content in the form of direct demotion, the indirect consequences can be just as damaging. Sites with significant duplicate content might experience:

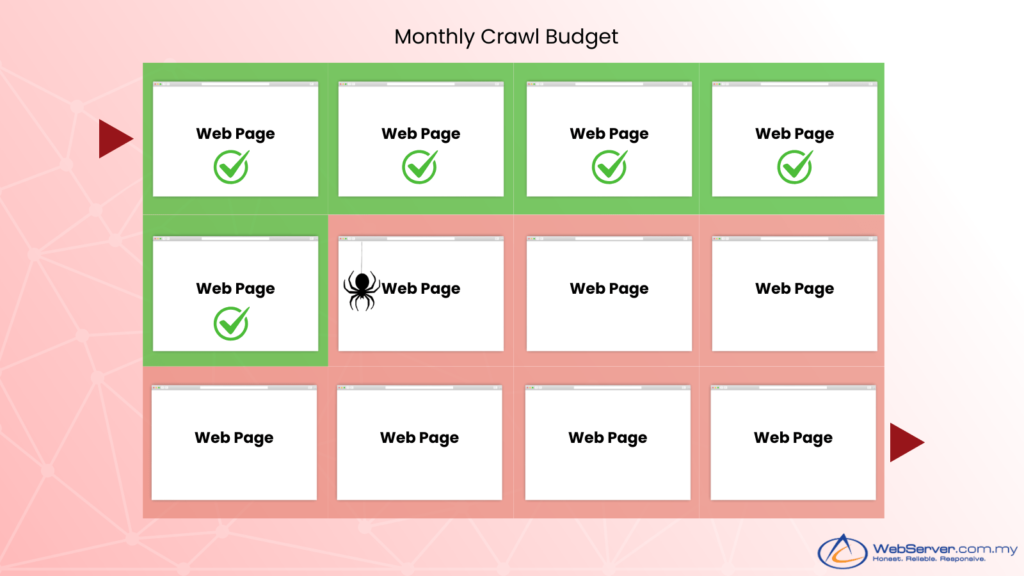

- Decreased Crawl Efficiency: Search engine bots may waste crawl budget (the number of pages they will crawl on your site within a given time) on duplicate pages, leading to important pages being overlooked.

- Reduced Trust: Persistent issues with duplicate content can erode your site’s trust and credibility in the eyes of both search engines and users.

- Algorithmic Filtering: In some cases, search engines may algorithmically down-rank your content if they suspect manipulative practices or poor content management.

User Experience

Impact of Duplicate Content on User Experience

The primary goal of any web page should be to provide a seamless and valuable experience for its visitors. Duplicate content can severely undermine this objective by presenting users with repetitive or redundant information. When users encounter the same content multiple times, it can diminish their trust and engagement with the site. Here are a few ways duplicate content can negatively impact user experience:

- Perceived Value: When users see the same content repeated across different pages, they may question the quality and originality of your site. This can lead to a perception that the site lacks substance, which can drive users away.

- Content Relevance: Users typically search for specific information. If they repeatedly encounter the same content without finding new, relevant information, they can become frustrated and leave the site to find more valuable resources elsewhere.

Confusion and Frustration

Duplicate content can also cause confusion and frustration for users in several ways:

- Navigation Issues: If internal links send users to different pages containing the same content, they might struggle to find the unique information they are seeking. This can make navigation cumbersome and discourage further exploration of your site.

- Search Engine Results: When search engines display multiple versions of the same content in search results, users might click through several links only to find the same material. This repetition can waste their time and lead to a poor search experience, reducing the likelihood of them returning to your site in the future.

Crawling and Indexing Issues

Wasting Crawl Budget

Search engines use a process called crawling to discover and index new content on the web. However, each website has a limit on how many pages a search engine bot will crawl, known as the “crawl budget.” Duplicate content can waste this valuable resource by making search engine bots repeatedly crawl the same or similar pages. When bots spend time crawling duplicate pages, it reduces the time and resources they can allocate to discovering new and unique content on your site. This inefficiency means that your most important and up-to-date pages might not get crawled and indexed as quickly or as frequently as necessary.
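A rough way to see how much crawl budget duplicates consume is to count how many of the distinct URLs a bot requested collapse onto the same page. The Python sketch below assumes you already have that URL list (for example, extracted from your server access logs), and it assumes, purely for illustration, that query strings never change page content on the audited site; adjust `page_key` if some parameters do.

```python
from urllib.parse import urlsplit


def page_key(url: str) -> str:
    """Reduce a URL to host + path, ignoring the query string and fragment.

    Assumption for this sketch: query parameters never change page content,
    so URLs that differ only in their query are duplicates.
    """
    parts = urlsplit(url)
    return f"{parts.netloc.lower()}{parts.path or '/'}"


def duplicate_url_share(crawled_urls: list[str]) -> tuple[int, int, float]:
    """Return (distinct URLs crawled, distinct pages, share of URLs that are duplicates).

    Exact repeat fetches of the same URL are collapsed first, so routine
    recrawls are not counted as waste.
    """
    unique_urls = set(crawled_urls)
    pages = {page_key(u) for u in unique_urls}
    duplicates = len(unique_urls) - len(pages)
    share = duplicates / len(unique_urls) if unique_urls else 0.0
    return len(unique_urls), len(pages), share


if __name__ == "__main__":
    log = [
        "https://example.com/shoes",
        "https://example.com/shoes?sort=price",
        "https://example.com/shoes?sessionid=abc123",
        "https://example.com/boots",
        "https://example.com/boots",
    ]
    urls, pages, share = duplicate_url_share(log)
    print(f"{urls} URLs crawled, {pages} distinct pages, {share:.0%} duplicates")
```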

Overlooking Important Pages

When duplicate content is prevalent on a website, it can lead to important pages being overlooked during the crawling process. Here’s how this can happen:

- Prioritization Issues: Search engines prioritize crawling and indexing pages they perceive as valuable and unique. If a significant portion of your site contains duplicate content, search engines might prioritize crawling other parts of your site or even other websites altogether, leaving your critical pages unnoticed.

- Indexing Delays: Duplicate content can cause delays in indexing because search engines need to spend additional time and resources determining which version of the content to index. This delay can be detrimental, especially if you frequently update your content or rely on timely information to engage your audience.

- Missed Opportunities: Important pages that are not crawled efficiently may miss out on ranking opportunities. If search engines fail to discover and index your valuable pages, those pages won’t appear in search results, limiting your visibility and potential traffic.

Content Ownership and Theft

Issues Related to Content Theft and Scraping

In the digital age, the ease of accessing and sharing information has led to widespread issues related to content theft and scraping. Content theft occurs when someone copies your original content without permission and publishes it as their own. Scraping, on the other hand, involves the use of automated tools to extract content from websites, often without authorization. These practices can have several negative consequences for your site:

- Loss of Originality: When your content is copied and republished elsewhere, it loses its uniqueness. Search engines may have difficulty determining the original source, potentially attributing higher rankings to the copied content instead of yours.

- Traffic Diversion: Stolen content can divert traffic away from your site. Users who find the scraped content on other platforms might never visit your original site, leading to a loss of potential audience and revenue.

- Damage to Reputation: If your content appears on low-quality or spammy sites, it can harm your brand’s reputation. Users may associate your content with these undesirable sites, affecting their perception of your credibility and professionalism.
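To spot scraped copies, you first need a way to measure how much of your text appears on another page. A common, simple technique is word shingling with Jaccard similarity, sketched below in Python. The shingle size `k` and any cut-off for flagging a page as a copy are assumptions to tune, and fetching and extracting the text of the suspected copy is left out.

```python
import re


def shingles(text: str, k: int = 5) -> set[tuple[str, ...]]:
    """Return the set of k-word shingles (overlapping word sequences)."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < k:
        # Very short texts become a single shingle (or none if empty).
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard_similarity(a: str, b: str, k: int = 5) -> float:
    """Shared shingles divided by all distinct shingles (0.0 to 1.0)."""
    sa, sb = shingles(a, k), shingles(b, k)
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)


if __name__ == "__main__":
    original = "the quick brown fox jumps over the lazy dog"
    suspected = "the quick brown fox jumps over the sleepy dog"
    print(f"{jaccard_similarity(original, suspected, k=3):.2f}")
```

Comparing shingles rather than whole pages catches copies that have been lightly edited, such as a changed word or an added paragraph, which an exact-match hash would miss.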

Importance of Protecting Original Content

Protecting your original content is crucial for maintaining your site’s integrity, reputation, and SEO performance. Here are a few reasons why safeguarding your content is essential:

- SEO Benefits: Unique and original content is a key factor in achieving high search engine rankings. By protecting your content from theft, you ensure that search engines recognize your site as the authoritative source, which can improve your visibility and rankings.

- Brand Integrity: Original content reflects your brand’s voice, expertise, and values. By safeguarding your content, you maintain your brand’s integrity and ensure that your audience receives information that truly represents your brand.

- Legal Protection: Protecting your content helps you enforce your legal rights. By monitoring and addressing instances of content theft, you can take appropriate action against infringers, such as issuing takedown notices or pursuing legal remedies.

Fix Duplicate Content Issues

Google Search Console helps website owners monitor duplicate content, particularly duplicates within their own site: its Page indexing report flags URLs that Google treats as duplicates of another page. When URL parameters cause more than one URL to serve the same page, add a canonical tag to each variant that points to your preferred (canonical) URL, and a self-referencing canonical tag on the canonical URL itself. This tells search engines which version should be prioritized in the search results.
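To verify the setup, you can check which canonical each URL actually declares. The Python sketch below uses only the standard library to classify a page’s canonical tag as missing, self-referencing, pointing elsewhere, or conflicting. The example URLs are hypothetical, fetching the HTML is left out, and URLs are compared as exact strings after relative hrefs are resolved, so differences such as a trailing slash count as a mismatch.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class CanonicalFinder(HTMLParser):
    """Collect the href of every <link rel="canonical"> in a document."""

    def __init__(self) -> None:
        super().__init__()
        self.canonicals: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if "canonical" in rel_values and attributes.get("href"):
            self.canonicals.append(attributes["href"])


def check_canonical(page_url: str, html: str) -> str:
    """Classify a page's canonical declaration."""
    finder = CanonicalFinder()
    finder.feed(html)
    finder.close()
    if not finder.canonicals:
        return "missing"
    # Resolve relative hrefs against the page URL before comparing.
    targets = {urljoin(page_url, href) for href in finder.canonicals}
    if len(targets) > 1:
        return "conflicting"
    target = targets.pop()
    return "self-referencing" if target == page_url else f"points to {target}"


if __name__ == "__main__":
    html = '<head><link rel="canonical" href="https://example.com/shoes"></head>'
    print(check_canonical("https://example.com/shoes", html))
    print(check_canonical("https://example.com/shoes?sort=price", html))
```

Run it against the preferred URL and each parameterized variant: the preferred URL should come back “self-referencing”, and each variant should point to it.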

Additionally, review the HTML templates your content management system generates so that internal links point to the canonical version of each page, especially on category pages, where the same content may be reachable from multiple locations.
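Checking internal links by hand gets tedious on large category pages. The Python sketch below flags links that point at a known duplicate URL instead of its canonical version. The `canonical_of` mapping (duplicate URL to preferred URL) is a hypothetical input you would build from your own crawl or CMS data, and the sample HTML is made up.

```python
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin


class LinkCollector(HTMLParser):
    """Collect the href of every <a> element."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def non_canonical_links(
    page_url: str, html: str, canonical_of: dict[str, str]
) -> list[tuple[str, str]]:
    """Return (linked URL, preferred URL) pairs for internal links that
    point at a known duplicate instead of its canonical version."""
    collector = LinkCollector()
    collector.feed(html)
    collector.close()
    problems = []
    for href in collector.hrefs:
        # Resolve relative links and drop #fragments, which do not create new pages.
        url, _fragment = urldefrag(urljoin(page_url, href))
        preferred = canonical_of.get(url)
        if preferred and preferred != url:
            problems.append((url, preferred))
    return problems


if __name__ == "__main__":
    canonical_of = {
        "https://example.com/shoes?sort=price": "https://example.com/shoes",
        "https://example.com/shoes": "https://example.com/shoes",
    }
    html = (
        '<a href="/shoes?sort=price">Shoes</a> '
        '<a href="/shoes#reviews">Reviews</a> <a href="/boots">Boots</a>'
    )
    for linked, preferred in non_canonical_links(
        "https://example.com/category/footwear", html, canonical_of
    ):
        print(f"{linked} -> link to {preferred} instead")
```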

Conclusion

In conclusion, duplicate content poses several significant challenges for SEO and user experience. It can dilute your site’s authority, cause navigation issues, frustrate users, and waste valuable crawl budget. Search engines may struggle to determine which version of content to prioritize, leading to reduced visibility and, where the duplication looks manipulative, algorithmic filtering. Moreover, content theft and scraping can harm your brand’s integrity and divert traffic away from your site. Proper techniques, such as specifying which page should be prioritized, tell search engines which version to index. This improves overall site performance and user experience, reduces the risks posed by other sites that host similar content, and can help your website rank better on Google.

Website owners must understand canonical URLs and meta descriptions to manage duplicate content, especially on ecommerce sites where session IDs and tracking parameters create multiple URLs for the same or similar pages. Once duplicates have been identified, a canonical tag signals to search engines which version should be prioritized, consolidating ranking signals on that URL.
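Choosing the URL that a canonical tag should name often starts with normalizing away parameters that do not change the page. Here is a minimal Python sketch. The names in `IGNORED_PARAMS` and `IGNORED_PREFIXES` are hypothetical examples (`utm_` is the widely used campaign-tracking prefix, and `gclid` and `fbclid` are common ad click identifiers), and sorting the remaining parameters assumes their order never affects content. Audit your own site before relying on any such list.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical lists: parameters that, on this imaginary site, never change
# what the page shows (session IDs and tracking/click identifiers).
IGNORED_PARAMS = {"sessionid", "sid", "gclid", "fbclid"}
IGNORED_PREFIXES = ("utm_",)


def normalize_url(url: str) -> str:
    """Drop session-ID and tracking parameters, sort the remaining ones,
    lowercase the scheme and host, and discard any #fragment."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
        and not key.lower().startswith(IGNORED_PREFIXES)
    ]
    query = urlencode(sorted(kept))
    return urlunsplit(
        (parts.scheme.lower(), parts.netloc.lower(), parts.path or "/", query, "")
    )


if __name__ == "__main__":
    for raw in (
        "https://Example.com/shoes?utm_source=news&color=red&sessionid=abc123",
        "https://example.com/shoes?sessionid=xyz&color=red",
    ):
        print(raw, "->", normalize_url(raw))
```

Both sample URLs normalize to the same address, which is the one their canonical tags would name.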

To maintain a strong online presence, prioritize unique, high-quality content. Site owners must address multiple versions of the same page on the same website to manage duplicate content effectively. By avoiding duplicate content and protecting your original material, you can enhance your site’s SEO performance and ensure a positive experience for your users. Implementing best practices and using tools to detect and manage duplicate content will help you achieve sustained success in the competitive digital landscape, and a good SEO service can also help you avoid duplicate content problems. Remember, unique content is not just recommended; it’s crucial for building trust with both search engines and your audience.

WebServer.com.my, a business unit of the privately owned Acme Commerce Sdn Bhd, was established in 1989. It has specialized in complex managed hosting services, such as database hosting and mission-critical application hosting, since 1999.



Extended

+603 2770 2803


Technical Support

support@webserver.com.my
